Engineers and computer scientists are working with neuroscientists and health care professionals around the world to develop advanced devices, including robotics and prosthetics, that interface closely with the brain and the body. These advanced tools may be used by people with physical disabilities, people who work in hazardous environments, or people who need to extend their capabilities for difficult tasks.

Areas of interest include exoskeletons (which augment the abilities of existing limbs), prosthetics (which restore function by replacing lost appendages, lost sensory modalities as with hearing devices, or lost movement and communication as with brain-computer interfaces), and supernumerary robots (which may attach to the human body and work in coordination with a person's natural limbs). It is now well established that as people learn to use new prosthetic tools, both their brain and their body change in fundamental ways. Thus, neuroengineering for prosthetics and human-machine interfaces addresses:

- Robotics and prosthetics design principles that allow new devices to work seamlessly with human form and function, sometimes in the presence of disability.

- Co-adaptation, in which the human brain and body adapt in parallel with machine-based adaptation (machine learning, adaptive control, deep learning, etc.).

- Human-machine interface (HMI) design (including brain-computer interfaces) in which humans control machines, but the machines may assume some functionality at various levels in the control hierarchy.

- Telepresence, in which the user's brain and body are in one physical location, but the physical task to be completed is in a different location.

Challenge

Approaches

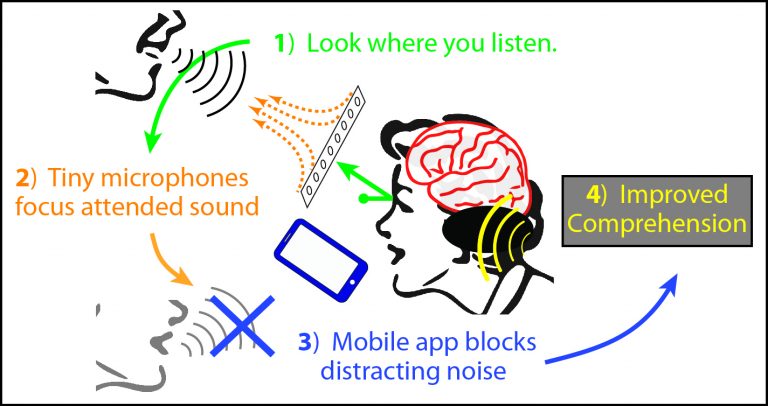

- Advanced Hearing Devices that Work Like the Brain

- Supernumerary Robotic Limbs that Connect to the Human Body and Coordinate with Natural Limbs

- Prosthetic limbs incorporating smart sensing and sensory/haptic feedback

- Brain-computer interfaces that read out speech and movement intent

Contributors

- David Brandman (Neurological Surgery, School of Medicine)

- Wilsaan Joiner (Neurobiology, Physiology, and Behavior, College of Biological Sciences; Neurology, School of Medicine)

- Sanjay Joshi (Mechanical and Aerospace Engineering, College of Engineering)

- Zhaodan Kong (Mechanical and Aerospace Engineering, College of Engineering)

- Craig McDonald (Physical Medicine and Rehabilitation, School of Medicine)

- Lee Miller (Neurobiology, Physiology, and Behavior, College of Biological Sciences; Center for Mind and Brain; Cognitive Science Program)

- Karen Moxon (Biomedical Engineering, College of Engineering)

- Carolynn Patten (Physical Medicine and Rehabilitation, School of Medicine; Neurobiology, Physiology, and Behavior, College of Biological Sciences)

- Stephen Robinson (Mechanical and Aerospace Engineering, College of Engineering)

- Jonathon Schofield (Mechanical and Aerospace Engineering, College of Engineering)

- Sergey Stavisky (Neurological Surgery, School of Medicine)

- Mitch Sutter (Neurobiology, Physiology, and Behavior, College of Biological Sciences; Center for Neuroscience)

- Erkin Şeker (Electrical and Computer Engineering, College of Engineering)